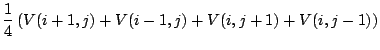

Whereas our principal mathematical justification for iterative averaging comes from the mean value property, the convergence behavior of the iteration must ultimately follow from the properties of the numerical methods. In this section we look more closely at the mathematical details of our iterative processes, with the ultimate goal of finding the optimal SOR coefficient.

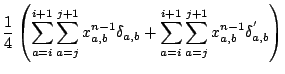

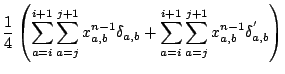

We can generalize the Jacobi method by distinguishing the boundary conditions,

Here ![]() selects those values which are non-boundary values, and ![]() indicates those which are boundary conditions.

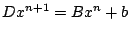

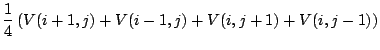

The above equation can be generalized by writing, for the Jacobi case,

$$D\,x[k+1] = (L+U)\,x[k] + b \qquad (3.4)$$

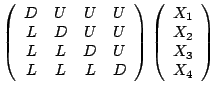

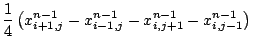

For the GS case, we use the new values as they become available whereby,

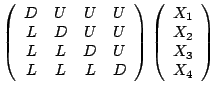

The reader can convince themselves that L is a lower triangular matrix and U an upper triangular one, and that the Jacobi form could be simplified as, for example,

$$x[k+1] = D^{-1}(L+U)\,x[k] + D^{-1}b \qquad (3.5)$$
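The matrix form of the Jacobi sweep can be tried out on a small system. The following is a minimal sketch, assuming the splitting A = D - L - U (the sign convention suggested by the D-L and D-wL terms in this section); the function names `split`, `jacobi_step`, and `jacobi`, and the three-point test problem, are hypothetical and chosen only for illustration.

```python
# Sketch: Jacobi iteration written through the splitting A = D - L - U.
# The splitting convention, the function names, and the 3-point test
# problem are assumptions made for illustration.

def split(A):
    """Return (D, L, U) with A = D - L - U; L and U are strictly triangular."""
    n = len(A)
    D = [[A[i][j] if i == j else 0.0 for j in range(n)] for i in range(n)]
    L = [[-A[i][j] if j < i else 0.0 for j in range(n)] for i in range(n)]
    U = [[-A[i][j] if j > i else 0.0 for j in range(n)] for i in range(n)]
    return D, L, U


def jacobi_step(D, L, U, x, b):
    """One sweep of x[k+1] = D^{-1} ((L + U) x[k] + b), using only old values."""
    n = len(x)
    return [
        (sum((L[i][j] + U[i][j]) * x[j] for j in range(n)) + b[i]) / D[i][i]
        for i in range(n)
    ]


def jacobi(A, b, sweeps=200):
    """Run a fixed number of Jacobi sweeps starting from the zero vector."""
    D, L, U = split(A)
    x = [0.0] * len(b)
    for _ in range(sweeps):
        x = jacobi_step(D, L, U, x, b)
    return x


# 1D Laplace problem: three interior points, boundary values 0 and 4.
A = [[2.0, -1.0, 0.0],
     [-1.0, 2.0, -1.0],
     [0.0, -1.0, 2.0]]
b = [0.0, 0.0, 4.0]   # the boundary values enter through b
x = jacobi(A, b)
print(x)  # approaches the linear profile [1, 2, 3]
```

In this toy problem each interior value relaxes toward the average of its neighbours, which is the mean value property in one dimension.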

Because L is strictly lower triangular and every diagonal entry of D is nonzero, D-L is itself lower triangular with the diagonal of D. Its determinant is the product of those diagonal entries and so cannot be zero, whereby D-L is invertible. Thus we have,

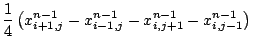
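Since D-L is lower triangular, the GS update never needs an explicit inverse: it can be carried out by forward substitution, one component at a time. The sketch below assumes the same A = D - L - U convention and a hypothetical three-point test problem; `jacobi_step`, `gauss_seidel_step`, and `sweeps_needed` are illustrative names, not part of any library.

```python
# Sketch: Gauss-Seidel as forward substitution on (D - L) x[k+1] = U x[k] + b,
# compared against Jacobi on the same small system. Names, tolerance, and the
# test problem are assumptions made for illustration.

def jacobi_step(A, x, b):
    """Jacobi sweep: every component is computed from the old vector x."""
    n = len(x)
    return [
        (b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
        for i in range(n)
    ]


def gauss_seidel_step(A, x, b):
    """GS sweep: new values are used as soon as they become available."""
    n = len(x)
    new = list(x)
    for i in range(n):
        # new[j] already holds x[k+1] for j < i and still holds x[k] for j > i
        s = sum(A[i][j] * new[j] for j in range(n) if j != i)
        new[i] = (b[i] - s) / A[i][i]
    return new


def sweeps_needed(step, A, b, tol=1e-10, max_sweeps=10_000):
    """Iterate from zero until the largest change in a sweep drops below tol."""
    x = [0.0] * len(b)
    for k in range(1, max_sweeps + 1):
        new = step(A, x, b)
        if max(abs(p - q) for p, q in zip(new, x)) < tol:
            return k, new
        x = new
    return max_sweeps, x


A = [[2.0, -1.0, 0.0],
     [-1.0, 2.0, -1.0],
     [0.0, -1.0, 2.0]]
b = [0.0, 0.0, 4.0]

k_jacobi, x_jacobi = sweeps_needed(jacobi_step, A, b)
k_gs, x_gs = sweeps_needed(gauss_seidel_step, A, b)
print("Jacobi sweeps:", k_jacobi, "GS sweeps:", k_gs)
```

On this system GS reaches the tolerance in fewer sweeps than Jacobi, which is the practical payoff of using updated values immediately.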

SOR has us calculate the difference between the new value of the potential as recommended by GS and the old value before the update. Calling this difference Q, SOR then adds wQ to the old value, where w is the SOR coefficient to be determined for optimal progress ($w=1$ is simply GS, $w>1$ is SOR). Thus in matrix form, the SOR method can be written

$$(D-wL)\,x[k+1] = \left[(1-w)D + wU\right]x[k] + wb$$

An obvious substitution is

$$H = (D-wL)^{-1}\left[(1-w)D + wU\right]$$

from which we get

$$x[k+1] = Hx[k] + (D-wL)^{-1}wb \qquad (3.8)$$
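The effect of the coefficient w is easy to see numerically. The following sketch applies the "old value plus wQ" rule component by component, which is equivalent to the matrix form when A = D - L - U is assumed. The names `sor_step` and `sor`, the tolerance, and the trial value w = 1.17 are all hypothetical choices for this small example, not an optimal coefficient derived here.

```python
# Sketch: SOR as "old value + w * (GS value - old value)", applied in place.
# Function names, tolerance, test problem, and the trial w are assumptions.

def sor_step(A, x, b, w):
    """One SOR sweep; w = 1 reduces to a plain Gauss-Seidel sweep."""
    n = len(x)
    new = list(x)
    for i in range(n):
        s = sum(A[i][j] * new[j] for j in range(n) if j != i)
        gs_value = (b[i] - s) / A[i][i]   # value recommended by GS
        q = gs_value - new[i]             # new[i] still holds the old value
        new[i] = new[i] + w * q
    return new


def sor(A, b, w, tol=1e-10, max_sweeps=10_000):
    """Iterate from zero; return the solution estimate and the sweep count."""
    x = [0.0] * len(b)
    for k in range(1, max_sweeps + 1):
        new = sor_step(A, x, b, w)
        if max(abs(p - q) for p, q in zip(new, x)) < tol:
            return new, k
        x = new
    return x, max_sweeps


A = [[2.0, -1.0, 0.0],
     [-1.0, 2.0, -1.0],
     [0.0, -1.0, 2.0]]
b = [0.0, 0.0, 4.0]

for w in (1.0, 1.17, 1.5):
    solution, sweeps = sor(A, b, w)
    print(f"w = {w}: {sweeps} sweeps, x = {solution}")
```

Scanning a few values of w in this way gives a feel for why an optimal coefficient exists: too little over-relaxation behaves like GS, while too much makes the iteration overshoot.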

The reader is encouraged to consult references [2] and [3] for a more detailed exposition, as we take more than a few liberties in omitting detail here.


Timothy Jones

2006-02-24
