Next: A race: Jacobi verses

Up: A More Fundamental Analysis

Previous: Conditions for the the

We begin our discussion by considering a simpler iteration system,

Let C be the set of all sequences  created by this iteration such that

the sequence converges to

created by this iteration such that

the sequence converges to  , i.e. we say

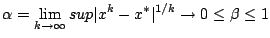

Define

, i.e. we say

Define

|

(3.11) |

We shall take liberties and call this  the asymptotic convergence factor of the

general iteration (see the references for a more sophisticated definition). For a given

iteration, the sequence will converge at least as rapidly as the sequence

the asymptotic convergence factor of the

general iteration (see the references for a more sophisticated definition). For a given

iteration, the sequence will converge at least as rapidly as the sequence  goes

to zero.

goes

to zero.

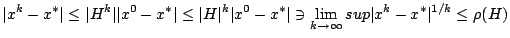

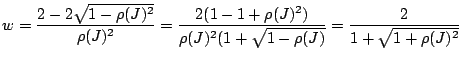

From our previous definitions,

|

(3.12) |

Here we have used that

.

We have loosly demonstrated that

The spectral radius establishes the upper bound on the rate of convergence. We thus seek to maximize the spectral radius as a to minimize the convergence time.

To begin to do so, we wish to roughly demonstrate a rather elegant lemma that says

that eigenvalues of the Jacobi iteration matrix occur in pairs (except if

there is a zero eigenvalue), which we shall denote

.

We have loosly demonstrated that

The spectral radius establishes the upper bound on the rate of convergence. We thus seek to maximize the spectral radius as a to minimize the convergence time.

To begin to do so, we wish to roughly demonstrate a rather elegant lemma that says

that eigenvalues of the Jacobi iteration matrix occur in pairs (except if

there is a zero eigenvalue), which we shall denote

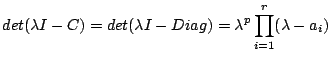

Suppose we take some random value  and want to find

and want to find

, where C has eigenvalues

, where C has eigenvalues  . C can be diagonalized

with its eigenvalues via

. C can be diagonalized

with its eigenvalues via

, whereby

, whereby

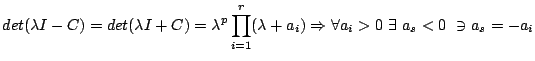

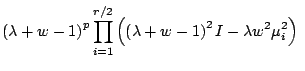

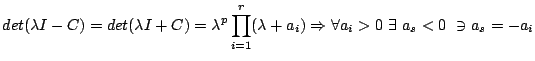

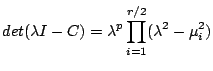

Thus, supposing the existance of p zero valued eigenvalues (p may be zero itself):

For a more thorough discussion of what is to follow, the reader is

referred to the references, especially on 2-Cyclic matrices (such as the one we are concerned with).

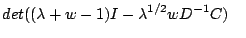

Now

may be written as

may be written as

And we note that

We can thus write that

|

(3.14) |

Thus (those with a strongly developed mathematical

sense will be unhappy with my use of thus here) we have Romanovsky's Lemma:

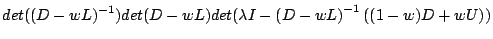

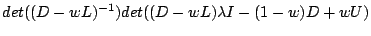

We can use this lemma to demonstrate another important detail about

form of matrices we are considering (formally called regular splittings).

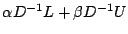

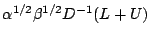

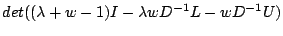

We wish to show that the eigenvalues of

are equal to those of

are equal to those of

. Let

. Let

We need to demonstrate that

That is to say, that the matrix

is consistently ordered.

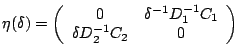

Define

And

It is clear that

Whereby in the spirit of previous arguments we have thus shown Equation 3.15 to be true.

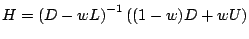

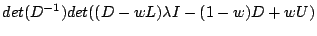

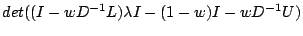

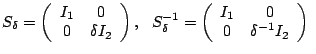

We are now ready to attack the problem head on. Recall that we defined

is consistently ordered.

Define

And

It is clear that

Whereby in the spirit of previous arguments we have thus shown Equation 3.15 to be true.

We are now ready to attack the problem head on. Recall that we defined

|

(3.16) |

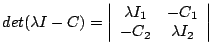

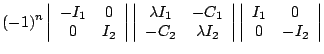

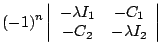

from this discussion we can reformulate this as follows:

|

|

|

|

| |

|

|

|

| |

|

|

|

| |

|

|

|

| |

|

|

|

| |

|

with with from 3.15 from 3.15 |

|

| |

|

Romanovsky's Lemma Romanovsky's Lemma |

(3.17) |

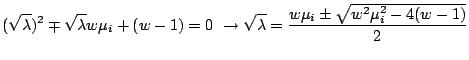

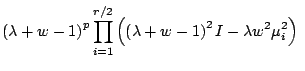

The eigenvalues of H may be found from

From this we get

Since  comes in

comes in  pairs,

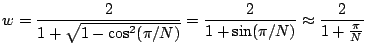

we can minimize our convergence time by choosing w such that

We are bound by the maximal

pairs,

we can minimize our convergence time by choosing w such that

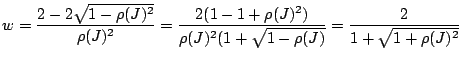

We are bound by the maximal  , the spectral radius, so that

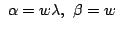

Defining

, the spectral radius, so that

Defining

and solving for w, we find

and solving for w, we find

|

(3.18) |

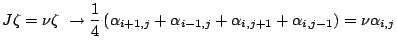

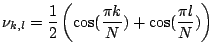

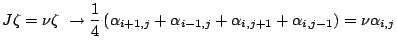

To find the spectral radius of J, we need find the eigenvectors/values of J.

The Jacobi equation is given, again, by

J is formed by stripping off the diagonal and multiplying by  Thus the eigenvalue equation gives

Thus the eigenvalue equation gives

|

(3.19) |

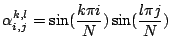

Introduce the ansatz that

where N is the dimensions of the grid (matrix, i.e. the number of rows). Plugging this into the equation above yields the equation

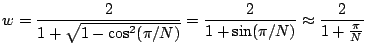

Obviously the maximum possible value will be

, this thus defining the spectral radius of concern,

where by,

, this thus defining the spectral radius of concern,

where by,

|

(3.20) |

Next: A race: Jacobi verses

Up: A More Fundamental Analysis

Previous: Conditions for the the

Timothy Jones

2006-05-15

![]() and want to find

and want to find

![]() , where C has eigenvalues

, where C has eigenvalues ![]() . C can be diagonalized

with its eigenvalues via

. C can be diagonalized

with its eigenvalues via

![]() , whereby

, whereby

![]() may be written as

may be written as

![]() are equal to those of

are equal to those of

![]() . Let

. Let

Romanovsky's Lemma

Romanovsky's Lemma